The Move

As everyone knows by now, Shadowserver had a bit of a funding issue earlier this year, which forced us to find a new space for our data operations: a place to call home for all the storage and computing that we do daily. A new data center was required. This story goes through that recent history, the actual move, and a few things that happened afterwards. This blog will be partly serious, partly tongue in cheek, and partly sad comedy, so enjoy our journey.

Just a warning now – this is a very, very long blog post including a lot of time, pictures, videos, and notes. Go get a coffee or two, your favorite alcoholic beverage, and a nice comfy chair before you start down this reading path.

T-273 Days (~2019-11-15)

- freed0 – Hey, what a nice space we have here in San Jose. It is just so lovely. It would really suck if we had to move

- Chief Architect – You remember that I promised to quit if we moved again.

- freed0 – What are you talking about? Move again? We should be in here for at least another DECADE!

- Chief Architect – Sigh, what happened?

- freed0 – Well….. Our current supporter has decided that they will no longer be hosting us. And we cannot tell anyone until they actually announce letting us go.

- Chief Architect – Hmm, how much time do we have?

- freed0 – Donno… Might be this year, might be next year, the details of exactly when are not being shared. But this means we need to spin up everything and start getting quotes and looking for a new home.

- Chief Architect – What is our budget?

- freed0 – Donno… We are broke right now, our sponsor will not be giving us any more funds, so we are solo.

- Chief Architect – So you are telling me, we need to find a new home without a budget, without a firm plan, without sponsors, without any details?

Well, yes. It was a difficult time in the beginning trying to figure out what we actually needed. Our current data center was mighty and massive:

- Secure site in San Jose, California

- Went live November 2017

- 3623 square feet + Office space

- $3M build cost

- 92 + 12 = 104 racks (plus more co-lo)

- State of the art hot/cold aisle sealed “pods”

- 750 kW power (325 kW in use)

While we had 92 “live” racks, most were not at full capacity. We had to first look at what was reasonable for power per rack and look at a firm consolidation plan on condensing our footprint to be as small as possible but still give us a year or two of growth. This had to happen before we could even reach out to any of the colo vendors.

T-151 Days (2020-03-16)

- freed0 – I have some good news and bad news

- Chief Architect – Stop playing games and just tell me what is happening.

- freed0 – We now have a date on when we need to be out of our current facility and an update on our finances.

- Chief Architect – Cool, how much time do we have?

- freed0 – The facility must be emptied out by the end of May.

- Chief Architect – What? I thought we had until August?

- freed0 – HAHAHAH, it would take a pandemic to allow that to happen!

- Chief Architect – Sigh, what is our funding outlook right now?

- freed0 – DEAD BROKE!

- Chief Architect – EH?

- freed0 – But we have some excellent prospects and we know that the community will rise to our aid, singing psalms about us, holding us up in the air in celebration…

- Chief Architect – Then what are our plans?

- freed0 – Reach out to co-location providers with our current requirements. Squeeze them for discounts, look for limited growth, and long term contracts. We need a place we can survive in. We also need to come up with alternative plans, such as severely limited operations, complete shutdown, and everything in between.

- Chief Architect – We have been working the details of most of that since November, so that is easy. We have a plan for consolidating the current number of racks that we can now start executing on. What do I tell our guys?

- freed0 – I’ll be in San Jose and inform everyone directly. We better have a lot of hard alcohol available. Once I inform the team we will be very short handed.

- Chief Architect – Like I have a life now

- freed0 – No holidays or vacations until after we move!

This was a big day: we were finally able to go public with what had been happening and the impact on Shadowserver. We announced our funding change. We had some severe changes to our US headcount and support. We needed to consolidate our rack count from 104 down to 50. It would make for some crowded, overpowered racks, but we needed to see what the smallest space we could fit into was.

T-127 Days (2020-04-09)

- freed0 – We have a new date for the move!

- Chief Architect – Really? It was getting pretty close.

- freed0 – Looks like it really was a pandemic and our provider stepped up and extended not only our employment, but is allowing us to stay in the facility until the end of August.

- Chief Architect – Great. What do we do with all the quotes and spinning up of providers?

- freed0 – Reach out and apologize and let them know that there has been a change of plans. Maybe some new vendors will step up and help out.

- Chief Architect – I hate calling people…

After discussions with our provider about the potential health risk to staff and to movers, we were happy to announce that their new Chief Security & Trust Officer, Brad Arkin, had kindly extended our data center move deadline from May 26th to August 31st. Shadowserver still had to pick up the employment costs for our US-based operations team from May 26th, but this data center migration deadline extension came as a great relief to everyone.

T-114 Days (2020-04-22)

While we kept reaching out to the vendors we had already been working with, we also put out the public word that we were nearing the end of our vendor search, and that this was the time for anyone who wanted to step up.

Requirements

We had very specific requirements:

- Number of customer provided racks: 62 (50 powered)

- Power per rack: 2 x 208VAC 3-phase (L15-30)

- Raritan PX3-5665V-C5 PDU (customer provided)

- Initial load of 6.5kW per rack / 323kW total – asking for 350kW of capacity

- Structured cabling:

- 4 x 10Gb 50/125um OM3 LC/LC to each rack

- Wired to a central rack (8 fibers per rack x 49 racks)

- Internet Uplinks: 2 x 10Gb

- 2 x IPv4 /24 per uplink

- 1 x IPv6 /64 per uplink

- Security:

- Racks must be physically separate (caged)

- Does not require ceiling cage cover

- Office space: minimum 200 square feet

- Storage Space: Would like to have on site of 200-400 square feet

- Loading dock: height must be 48″-55″ or provide surface mount dock lift

- Preferred Locations:

- CA:San Jose area

- CA:Sacramento area

- OR:Portland area

- Move in date: 2020-08-15
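
The power and cabling figures in this list can be sanity-checked with a little arithmetic. The sketch below is only an illustration using the numbers from the list; the function and variable names are made up for this example. Note that 50 racks at a nominal 6.5 kW comes to 325 kW, slightly above the 323 kW total quoted above, which is presumably a measured figure rather than a nominal one.

```python
# Figures taken from the requirements list above.
POWERED_RACKS = 50
LOAD_PER_RACK_KW = 6.5
REQUESTED_CAPACITY_KW = 350
FIBERS_PER_RACK = 8   # 4 x LC/LC duplex links, two strands each
CABLED_RACKS = 49     # rack count used in the list for the structured cabling


def power_summary(racks, kw_per_rack, capacity_kw):
    """Return (nominal load, spare capacity, spare capacity as a fraction)."""
    total = racks * kw_per_rack
    spare = capacity_kw - total
    return total, spare, spare / capacity_kw


total_kw, spare_kw, spare_fraction = power_summary(
    POWERED_RACKS, LOAD_PER_RACK_KW, REQUESTED_CAPACITY_KW
)
fiber_strands = FIBERS_PER_RACK * CABLED_RACKS

print(f"Nominal load: {total_kw:.0f} kW")
print(f"Headroom: {spare_kw:.0f} kW ({spare_fraction:.1%} of requested capacity)")
print(f"Fiber strands terminating at the central rack: {fiber_strands}")
```

In other words, the 350 kW request leaves only about 7% of headroom over the nominal initial load, which is why powering up the unpowered racks later would need additional capacity.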

T-89 Days (2020-05-17)

It was a long and hard path to the final decision. A lot of very friendly organizations came up and tried to fit in with our needs with a range of options. There were many offered but we could take only one.

Criteria for Decision

Besides the requirements we weighted the areas for each of the vendors in the following fashion:

- Requirements: Needing to meet all the requirements set forth was a given but a common area where several of the providers failed was their loading dock. A couple did not have one at all, and at least one had a short one. Using a forklift to move the racks off of the moving trucks was going to be a perilous activity that we were not willing to partake of. Our racks range from 1500 lbs to 1900 lbs (680 kg to 860 kg) in weight each while loaded with gear. They are barely able to roll at that weight and to try to lift and move with a forklift? We deducted severely for all the failure areas.

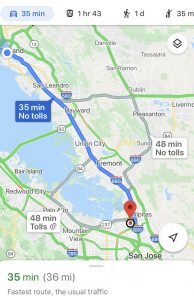

- 65% – Cost over 36-months: Most of the quotes that were submitted were for 36-months, and some to increase the discounts also included 60-month quotes. Since we are now using community funding we need to make sure that we get the best possible deal for our new space. We did not care if it was a little old, ugly, needed a paint job, or had that odd lingering smell. But we had to be very cost conscious.

- 20% – Location: Our first choice was to keep the data center in the California Bay Area, followed by Sacramento, California, and Portland, Oregon. We did get a few quotes for the Phoenix, Arizona area, and while those were the least expensive, they would have increased the move costs so much that the total would have ended up very close to quotes from locations nearer to where we already were. The further away from the Bay Area, the more we discounted the vendor.

- 10% – Providers: This ended up being more important as a differentiator between similar providers. We have two other colo locations in the Bay Area that we would like to eventually combine in the new data center space. Since we plan on looking at more cloud providers in the future, being able to have direct network connections in the colo to those cloud providers was also very important.

- 2% – Office: We needed some sort of minimum office space since we would not have one for the foreseeable future. The system administrators needed some space to work that was not in the data center itself.

- 2% – Storage: We still have a fair amount of spares and other parts that we would prefer to keep stored somewhat close to where all the racks of gear are. While we could consider offsite storage, that would quickly become unwieldy.

- 1% – Post Contract Cost: The lowest consideration, but we did not want the price to balloon up at the end of the contract forcing us to move unless it was really necessary. The annual increases ranged from 3% to 10%. Even with steep initial discounts, this could easily balloon up the long term cost quickly.
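
To see why the annual increase matters even at a 1% weight, here is a small sketch of how a yearly escalator compounds. The 3% and 10% rates are the ends of the range mentioned above; the base cost of 100 is a normalized, made-up unit and the five-year horizon is just an example, not a figure from any of the quotes.

```python
def yearly_costs(base_annual_cost, annual_increase, years):
    """Cost for each year when the price rises by a fixed percentage annually."""
    return [base_annual_cost * (1 + annual_increase) ** year for year in range(years)]


def total_cost(base_annual_cost, annual_increase, years):
    """Sum of the escalating yearly costs over the whole period."""
    return sum(yearly_costs(base_annual_cost, annual_increase, years))


BASE = 100   # hypothetical normalized first-year cost
YEARS = 5    # example horizon

for rate in (0.03, 0.10):
    final_year = yearly_costs(BASE, rate, YEARS)[-1]
    print(
        f"{rate:.0%} escalator: final-year cost {final_year:.2f}, "
        f"total over {YEARS} years {total_cost(BASE, rate, YEARS):.2f}"
    )
```

With these example numbers, the fifth-year price is roughly 13% above the starting price at a 3% escalator and roughly 46% above it at 10%.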

There were also several other considerations, but those were specific to each provider: whether we would be just another occupant, whether they wanted a partnership, or whether there was even more to the relationship. These shifted the base weights differently for each vendor.
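
The base weighting described above can be sketched as a simple weighted score. Only the percentages come from the list; the 0 to 10 scoring scale, the two vendors, and their scores are invented purely for illustration and do not reflect any real quote. The per-provider adjustments and the requirement deductions are not modeled here.

```python
# Base weights in percent, as listed above. They add up to 100.
WEIGHTS = {
    "cost_36_months": 65,
    "location": 20,
    "providers": 10,
    "office": 2,
    "storage": 2,
    "post_contract_cost": 1,
}


def weighted_score(scores):
    """Combine per-criterion scores (assumed 0-10 scale) into a single number."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100


# Hypothetical vendors with made-up scores.
vendor_a = {"cost_36_months": 6, "location": 10, "providers": 9,
            "office": 8, "storage": 8, "post_contract_cost": 5}
vendor_b = {"cost_36_months": 9, "location": 4, "providers": 5,
            "office": 5, "storage": 5, "post_contract_cost": 7}

for name, scores in (("Vendor A", vendor_a), ("Vendor B", vendor_b)):
    print(f"{name}: {weighted_score(scores):.2f}")
```

In this made-up case the cheaper but more distant Vendor B edges out Vendor A, which shows how heavily a 65% cost weight dominates everything else.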

Quotes and more Quotes

What we did not expect was the number of quotes we would get from the same vendors repeatedly. We were getting new quotes from the same set of vendors all the way up until the last day they were due, and then a few afterwards. All of them wanted a second chance to bid again if they lost. But a final decision was made and now we will be moving forward.

Our final choice was Digital Realty at their Oakland data center location. The space was nice, it was still in the Bay Area, and it met all of our requirements. They had the second-highest number of carriers, at 62, which had been one of our sticking points with many of the other locations and vendors. It will also be possible to get a network connection to our other colo locations so that we can more easily migrate our other gear to a single location.

The overall location has three floors spanning 165,000 sq ft (15,300 sq m). With over 14 MW of power they have plenty of capacity for our small amount of gear, as well as UPS and generator backups, something we have never had previously. Most of our power outages have been short ones caused by the power company or slippery-fingered electricians. We should see a nice increase in uptime, or lack of downtime, once we move.

We had a lot of good, excited, and aggressive vendors that we looked at. It was a difficult choice that went up to the last minute. It was an interesting process that I am happy we do not have to repeat for another five years.

The Shadowserver Data Center

We have talked about our spaces before and that we have moved and moved a couple of times before. We have provided some nice pictures of our data centers a few times, and we will provide another set about the move and the final look.

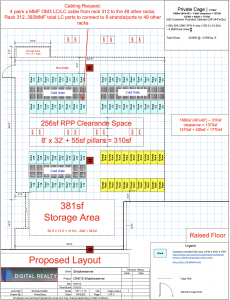

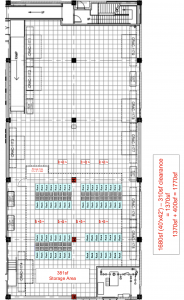

Our new layout is much simpler than the previous one. We have 50 “hot” racks that will start off powered up and 18 “dead” racks that will not have any power at all. At a later date we can power up these other racks for some additional installation and sustaining costs. You can see that we will be able to have our storage area within the cage. We will have room for both of our lab benches and all of our spares. It will be very nice to have everything in one place and not have to transport parts as we need them.

Cable trays will be installed in the ceiling that will follow along the back of all the racks along the hot aisles. A cross connection of the cable trays will be installed through the middle of the room. Here are some of the preliminary pictures of the space. This picture is looking into the future storage space. None of the fencing is up, but they will be installing it fairly soon now that we have signed the contract.

The second picture is the space on the far right of where our cage will be.

Here is an overall picture of the area where our cage will be installed. The entire bay is empty at this time and we are only occupying a portion of it. So if you want to be our colo neighbor let me know.

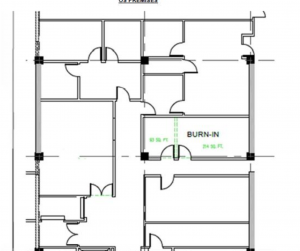

We also secured an office space for our team to work in when not needing to be in the data center itself. It is a thin narrow office, but sufficiently large for us to put all the desks we have within it.

In this picture it looks more like a hallway than an office, but it is. The floor plan shows a set of doors near the middle of the room, but the reality is that it has a set of double doors at the end of the room.

T-60 Days (2020-06-29)

Construction and preparation have started at the new facility. While the pictures are presented all at once here, the installation work continued all the way up to the week before the move.

Here they are working on the electrical for all of our racks.

The planned fiber and ladder trays will be mounted on top of all the racks once they arrive, but to get the trays installed now they had to put in vertical braces to hold them up until the racks arrived:

And here are the installed fiber trays:

And now the fence:

T-14 Days (2020-07-31)

Just a two-week countdown until our move. While we have been warning people that everything will be coming down, everything is now pretty much locked to the schedule. We announced our planned outage. There is no going back at this point.

T-1 Day (2020-08-13)

Final day of preparation and last-minute cleaning. We have made sure everything is properly labeled so that we know what order the racks should be taken out in, as well as what order they need to be installed in and in which locations. Our System Administration team has been working crazy hours for several weeks getting ready for this. We have a pandemic, we will be moving almost 120,000 lbs of gear and racks, and we have one chance to get this right.

T+0 Days (2020-08-14)

Early in the morning the team unbolted all the racks and started the massive shutdown of everything. Besides the brief power outages, we have never had this space so silent since we moved in. It was a moment of mourning as we left not only the space we had occupied for over two years, but 16 years of hosting at Cisco and in my own case, 22 years of employment. The doors all had to come off and be moved separately. This was to protect the doors as well as to speed up the process of getting all the racks bolted down. The racks cannot be powered until they are all bolted down and grounded.

We carefully wrapped up all the doors so we would have them in good condition for installing at our new location:

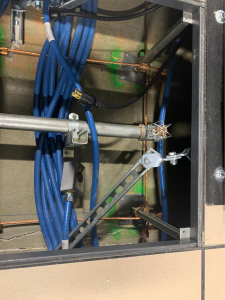

Things you find under the floor in our old space. It seems that the electricians were not as meticulous as we would have liked:

While attempting to unbolt the racks, some of the bolts had been stripped upon install. This was not a one time thing. There were several that were poorly installed and nearly impossible to remove:

Final look at our office that we worked in for the last two and a half years:

T+1 Day (2020-08-15)

Today the racks and gear will start being moved. Most of the office furniture will be moved tomorrow so we can focus on trying to get the data center installed and running as quickly as possible. First, the snack prep:

The new space is all prepped and ready to go:

The moving trucks have arrived and are ready to go. We had six trucks in total and over 20 movers:

The gear is all lined up to be moved. We had carefully labeled all the racks so that they would be taken in a specific order and on and off the trucks in that order. This way we could maximize our efficiency when we moved into the new space:

The first load of gear finally left the facility:

And the rest of the gear was moved that was not rack mounted:

The gear slowly arriving and being installed in our new location:

The complete disarray of racks as they arrived:

And we cannot forget the doors:

And dumping all the gear and miscellaneous stuff that we do not need:

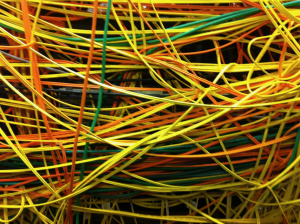

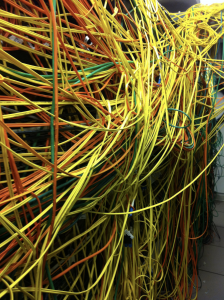

Some reminiscing about the old wiring rooms we sometimes had to deal with at the previous facility. It was interesting when we had to find a cross-connect in this room. More difficult was when we had to come back a year later and find it again to troubleshoot a connection issue:

In the end the movers were able to take 16 truck trips today. We estimate there should not be more than a total of six truck trips tomorrow. We were limited by a combination of maximum weight that each truck could carry as well as the insurance amount per truck. Each truck was insured for $2M USD.

While we were willing to play the odds on packing the trucks, the movers were not. They were very careful and put safety first for the move. These racks weighed between 1500 lbs and 1900 lbs each. Not something I wanted to push along a hallway.
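
The two limits mentioned above, weight per truck and insured value per truck, can be sketched as a simple "whichever runs out first" calculation. The rack weight and the $2M insurance figure come from this post; the truck payload limit and the replacement value per loaded rack are purely hypothetical placeholders, since we are not publishing the real ones.

```python
def racks_per_trip(rack_weight_lbs, rack_value_usd, payload_limit_lbs, insured_limit_usd):
    """Racks that fit on one truck under both the weight and the insurance limit."""
    by_weight = payload_limit_lbs // rack_weight_lbs
    by_value = insured_limit_usd // rack_value_usd
    return min(by_weight, by_value), by_weight, by_value


HEAVIEST_RACK_LBS = 1_900        # from the post
INSURED_PER_TRUCK_USD = 2_000_000  # from the post
PAYLOAD_LIMIT_LBS = 12_000       # hypothetical truck payload limit
RACK_VALUE_USD = 450_000         # hypothetical value of one loaded rack

per_trip, by_weight, by_value = racks_per_trip(
    HEAVIEST_RACK_LBS, RACK_VALUE_USD, PAYLOAD_LIMIT_LBS, INSURED_PER_TRUCK_USD
)
limiting = "insurance" if by_value < by_weight else "weight"
print(f"Weight allows {by_weight} racks, insurance allows {by_value}.")
print(f"Racks per trip: {per_trip} (limited by {limiting})")
```

With these placeholder numbers the insurance cap, not the weight, is what limits each load, which is one way a truck can leave looking half empty.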

T+2 Days (2020-08-16)

A look at our new facility from the outside:

As the gear is moved in and we start sorting everything:

All of our small spares and cables.

T+3 Days (2020-08-17)

After several days of effort, we are finally finished with the old space. We were in here for two and a half years. It was a great space and the largest that we have ever had, and probably the largest we will ever have:

Video tour of the new space:

Our new office space:

Our old data center emptied out:

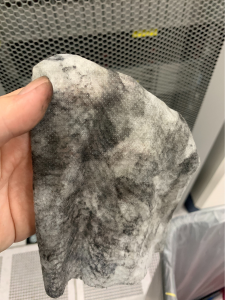

We seemed to have been a little lax in our cleaning:

T+4 Days (2020-08-18)

It appears that our carrier was unable to find the 24/7 security desk to enter the colo yesterday to install our line, and will now be back around after 1600 PDT, maybe. No Internet, but everything else is powering up so far. The giant PDUs outside our building:

And generators:

Fuel tanks:

Did not think that the biggest hurdle to finishing was waiting for the one minute cross-connect from the carrier. Especially since they had a deadline of yesterday. End of Day Five – We are up and running including Internet connectivity. Services will resume tomorrow, maybe reports on Thursday. Note that the team is still on the hamster wheel of repairs for the rest of tonight and tomorrow.

A view from our roof:

And in case you were curious, from the street:

T+5 Days (2020-08-19)

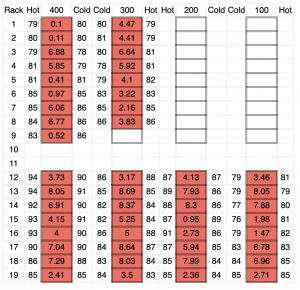

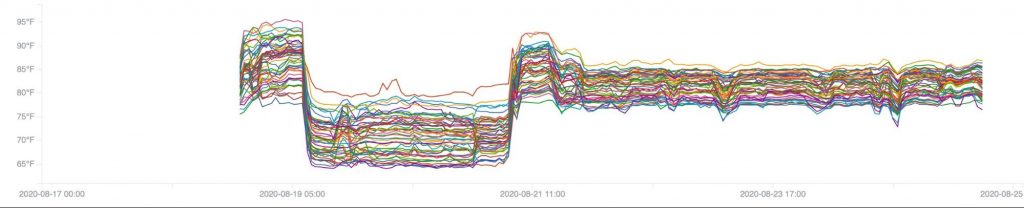

Everything has been moved in, bolted down, and powered up. We need to re-balance a bunch of the clusters as well as get the temperatures set for all the racks. You can see here what it looked like once it was all powered up. The numbers outside the boxes are the temperatures in Fahrenheit, and the number inside each box is the kW being used by that rack:

We expect it to take us at least a week of balancing to get the temperatures to a level that will work for us. We might move some of the gear around as well as change out the air-flow tiles for high-capacity ones where needed.

We did get our office put together finally:

And all the doors mounted:

T+11 Days (2020-08-25)

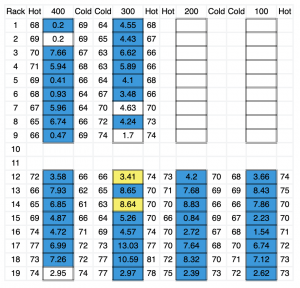

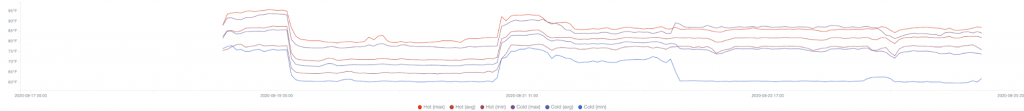

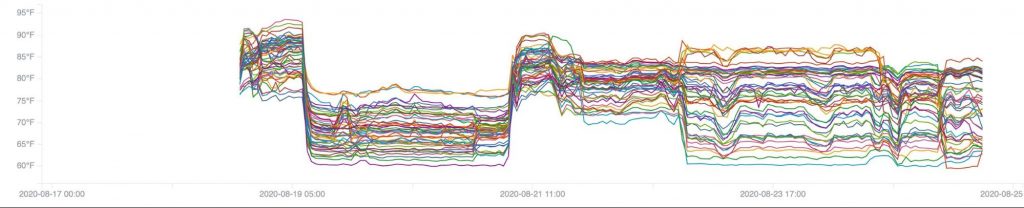

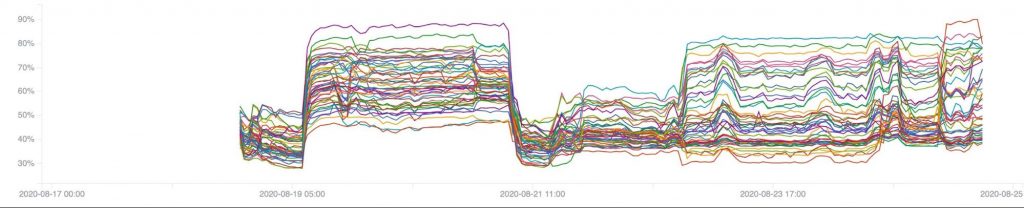

For the last week, besides bringing up all of our services, we have been working with the data center to get a proper balance of cooling. We have a temperature/humidity sensor on every rack. They were all mounted on top of the racks but have since been moved lower, most to mid-rack. Getting the sensors closer to the floor will better represent the “intake” temperature for the gear. So higher temperatures are expected as we fine-tune the locations and the air flow.

Here are the Hot Aisle Temperatures:

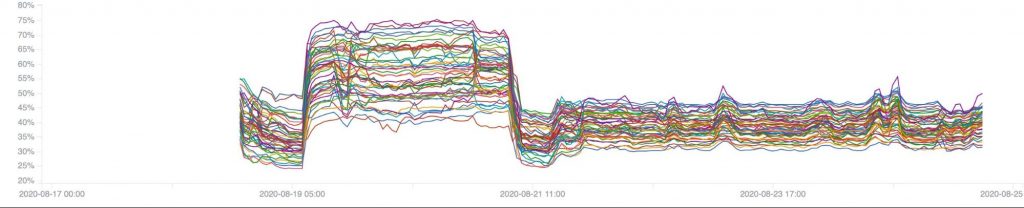

And the Hot Aisle Humidity:

Cold Aisle Temperatures:

And finally Cold Aisle Humidity:

A look at the different air-flow tiles:

T+45 Days (2020-09-30)

We can finally almost completely empty our other Bay Area colos. One of the cost savings that we had planned for was to consolidate as much of our gear as possible in our new data center location. This was also one of the reasons we chose the vendor we did. We needed to be able to migrate all the networks from the old colos to the new one. We wanted to have an easy cross-connection and not have to tunnel back to where we had the old gear.

T+58 Days (2020-10-12)

Everything is pretty much in place now. We have shut down our other two colo locations and migrated both the gear and networks to the main data center. The temperatures have settled out. Our funding for 2020 was achieved.

Conclusion

- freed0 – Wow, that was a lot of work.

- Chief Architect – Hmm, didn’t you stay home in Oregon and let us do everything?

- freed0 – … I was there in spirit! The pandemic! Fake News! Umm, well, yeah. You guys did an awesome job. It was a hellish schedule, difficult work, and painful for everyone. You guys deserve all the kudos for this effort. It was magnificent.

And here we are. We have five years in this new location, and that could easily extend depending on the future for all of us.

What can you do to Help us?

Our work has not ended, it only continues to increase. The criminals are not resting. The Internet grows and the load to help secure it does accordingly. While we reached our 2020 funding goals, we now need to focus on the 2021 goals. We saved Shadowserver this year, but we still need to build that sustaining model to support it for the next couple of decades. We are still in need of all of your assistance and sponsorships.

The fight has not ended and this is our community that we all need to work in.